A method for object identification was developed as part of an algorithm to select a landing location for a spacecraft approaching the surface of the Moon. Image: NASA, ISS

If you’ve ever watched a moon landing on TV, you may have wondered how the spacecraft's landing spot is chosen. Small surface features at a landing site, such as rocks, craters and fissures, can cause significant damage to a small landing craft. Yet the limited resolution of satellite imagery means that these features cannot be detected from Earth. But what if the spacecraft could decide for itself where to land safely as it approached the moon?

Now, researchers from the University of Cincinnati have developed an optical imaging algorithm that can select a landing site for a spacecraft as it approaches the moon.

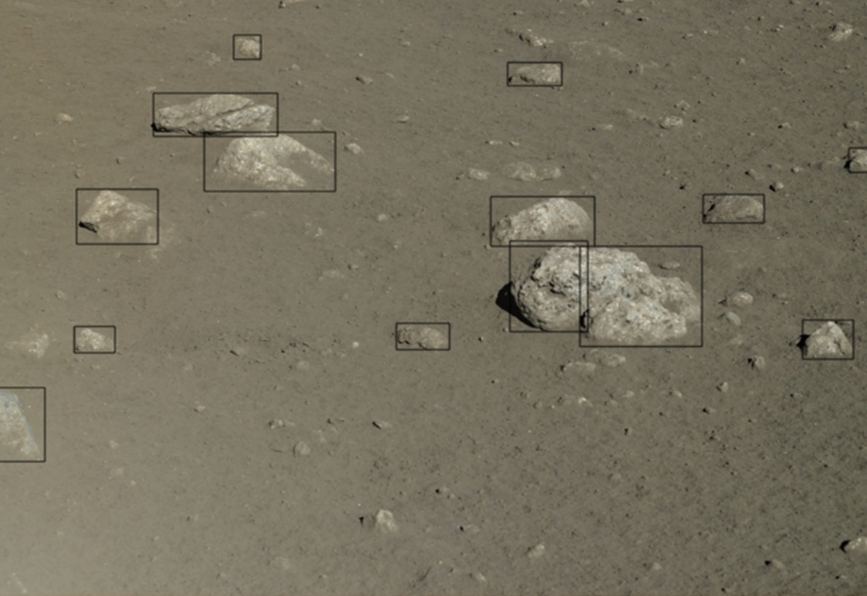

Fig 1: Example of a Labeled Image from the CNSA dataset.

In the past, NASA has experimented with LIDAR, a method that uses lasers to generate high-resolution 3D images, to provide detailed information about the landing surface, including an elevation map and information about large rocks and crater slopes. However, many smaller spacecraft cannot spare the power and mass that a LIDAR system requires. A system that takes ordinary photographs would be cheaper and more efficient to implement, and the same camera could be reused for science-related imagery.

To train the model to make accurate predictions, the researchers used lunar images taken by the China National Space Administration’s Yutu-1 moon rover, which operated between 2013 and 2016. The rocks and debris in these training images were labeled so that the imaging algorithm could learn to make its own predictions on similar images.

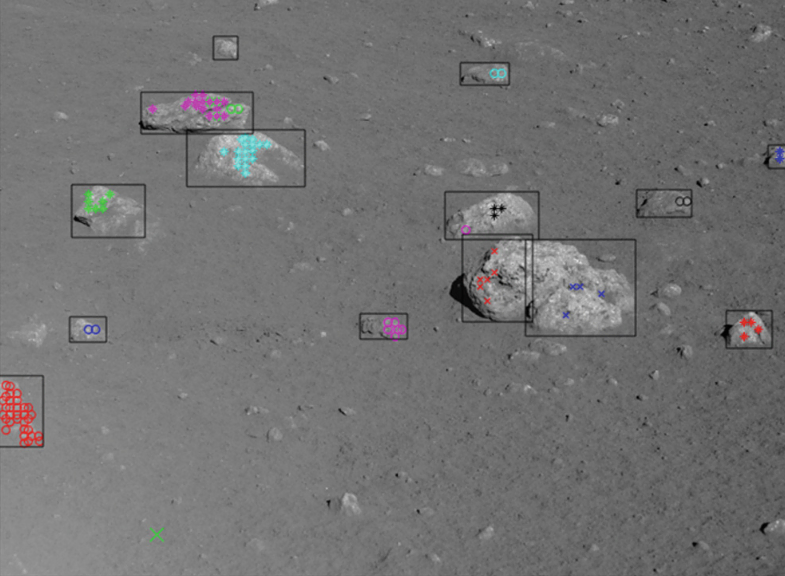

The algorithm was designed with the primary objective of selecting the best landing location and a secondary objective of doing so in a computationally efficient manner. To achieve this, the image is first reduced to a manageable size. Next, the algorithm groups the individual pixels in the image into objects based on their proximity to one another and their similar levels of intensity. Then, each object is classified into one of four types: sky, shadow, rock or open area. Finally, each open area is scored based on its size and its proximity to undesirable objects, and the landing location with the highest score is chosen.

Fig 2: Identified Rock Pixels for Image #3 in CNSA database.
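To make the four steps described above more concrete, here is a minimal Python sketch of such a pipeline. It is not the researchers' code. The function names, the flood-fill grouping, the intensity thresholds, the classification rules (dark regions touching the top of the frame are treated as sky, other dark regions as shadow, small bright regions as rock) and the scoring formula are all assumptions made for illustration, since the article does not describe those details.

```python
import math
from collections import deque


def downsample(image, factor):
    """Step 1: shrink a 2D grid of intensities by averaging factor x factor blocks."""
    rows, cols = len(image) // factor, len(image[0]) // factor
    return [[sum(image[r * factor + i][c * factor + j]
                 for i in range(factor) for j in range(factor)) / factor ** 2
             for c in range(cols)]
            for r in range(rows)]


def group_pixels(image, tolerance):
    """Step 2: flood-fill 4-connected pixels whose intensity is within
    `tolerance` of the region's seed pixel. Returns a list of pixel lists."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c]:
                continue
            seed, pixels, queue = image[r][c], [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx]
                            and abs(image[ny][nx] - seed) <= tolerance):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(pixels)
    return regions


def classify(pixels, image, dark=50, min_open_size=6):
    """Step 3: label a region as sky, shadow, rock or open.
    These rules and thresholds are hypothetical placeholders."""
    mean = sum(image[y][x] for y, x in pixels) / len(pixels)
    if mean < dark:
        return "sky" if any(y == 0 for y, _ in pixels) else "shadow"
    return "open" if len(pixels) >= min_open_size else "rock"


def centroid(pixels):
    n = len(pixels)
    return (sum(y for y, _ in pixels) / n, sum(x for _, x in pixels) / n)


def select_landing_site(image, factor=2, tolerance=20, clearance_weight=1.0):
    """Step 4: score each open area by size plus distance to the nearest hazard.
    Returns ((row, col), score) in downsampled coordinates, or None."""
    small = downsample(image, factor)
    regions = group_pixels(small, tolerance)
    kinds = [classify(p, small) for p in regions]
    hazards = [px for p, k in zip(regions, kinds)
               if k in ("rock", "shadow") for px in p]
    best = None
    for pixels, kind in zip(regions, kinds):
        if kind != "open":
            continue
        cy, cx = centroid(pixels)
        # With no hazards in view, clearance adds nothing and size alone decides.
        clearance = min((math.hypot(cy - y, cx - x) for y, x in hazards),
                        default=0.0)
        score = len(pixels) + clearance_weight * clearance
        if best is None or score > best[1]:
            best = ((cy, cx), score)
    return best
```

Calling `select_landing_site(image)` on a grayscale image stored as a list of rows returns the centre of the best-scoring open area together with its score. A real system would need far more robust classification than these simple thresholds, particularly for the low-contrast rocks the study found difficult.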

The results of the study showed that the model correctly classified 80% of all labeled rocks and found an acceptable landing site in 72% of the images. The researchers considered this an acceptable result for an algorithm in the early stages of development.

However, the algorithm seems to struggle with classifying small rocks that do not contrast well with the background. In addition, these results were generated on a standard laptop with uncompiled code, and some of the images took up to 5 minutes to process. If the researchers can overcome these problems, the algorithm could become viable for use on actual spacecraft.

It remains to be seen whether the approach taken in this algorithm can match traditional machine learning methods. If it can perform at a comparable level, we might see space agencies use this technology on moon landers in the near future.